The second step in a Search Engine Optimisation project is to get some metrics on the state of the site before the Optimisation project proper commences. If you missed the first step in this Search Engine Optimisation project, you can see it here.

To achieve this, we first need to benchmark the site's current web traffic statistics so that you can track your return on investment (ROI). Several web statistics analysis applications are available for this task. The main free ones are Webalizer, AWStats and Analog, and I find that using a combination of these tools is better than relying on any one of them. At this point we are mostly interested in the volume of traffic to the site. We will compare this figure against the traffic the site receives as the project progresses.
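Webalizer, AWStats and Analog all work from the web server's access logs. To show what a raw benchmark figure looks like, here is a minimal Python sketch that counts successful requests and distinct client IP addresses per day. It assumes the server writes the Apache Combined Log Format. The helper name `daily_traffic` is hypothetical. Distinct IPs are only a crude stand-in for the visitor counts those tools report.

```python
import re
from collections import defaultdict
from datetime import datetime

# Assumption: Apache Combined Log Format, e.g.
# 192.0.2.1 - - [10/Oct/2005:13:55:36 -0700] "GET / HTTP/1.1" 200 2326 "-" "Mozilla/4.0"
# The trailing referer/user-agent fields are optional, so Common Log Format lines also match.
LOG_LINE = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?'
)


def daily_traffic(lines):
    """Hypothetical helper: map each date to (successful hits, distinct client IPs)."""
    hits = defaultdict(int)
    hosts = defaultdict(set)
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None or not match.group("status").startswith("2"):
            continue  # skip malformed lines and non-2xx responses
        # "10/Oct/2005:13:55:36 -0700" -> date(2005, 10, 10)
        day_text = match.group("time").split(":", 1)[0]
        day = datetime.strptime(day_text, "%d/%b/%Y").date()
        hits[day] += 1
        hosts[day].add(match.group("host"))
    # Return the days in chronological order
    return {day: (hits[day], len(hosts[day])) for day in sorted(hits)}
```

Passing an open log file (or any iterable of lines) to `daily_traffic` before any changes are made gives the baseline. Rerunning it over later logs shows whether traffic has moved.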

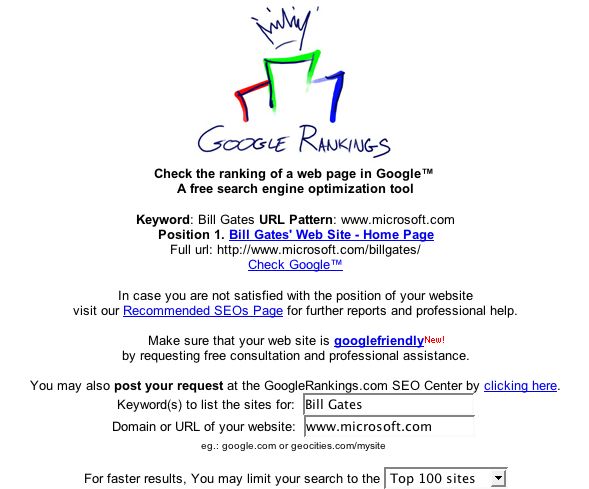

Secondly, we will want to examine the site's search engine rankings for the keywords or keyphrases that target clients are likely to use to find it. Googlerankings, a website that lets you look up the position of any site in Google's search engine results pages (SERPs) for any keyword or phrase, is the main tool for this stage of the process. In the image below we can see that, unsurprisingly, Microsoft.com holds the no. 1 position on Google for the keyphrase Bill Gates:

To perform this step, however, we need a list of keywords and keyphrases to use as our baseline. Some of these will be gleaned from the web traffic statistics in the previous step (the words and expressions that have historically been used to find the site), and some will be ones you want the site to be found for.
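For the historical half of that list, the referrer field in the access logs records the page a visitor came from. When that page was a search engine's results page, the URL usually carried the search terms. Here is a minimal Python sketch that tallies those phrases. The host fragments and parameter names in `QUERY_PARAMS` are assumptions (Google and Bing use `q`, Yahoo! uses `p`), so check them against your own logs. Many search engines no longer pass the query in the referrer at all, so expect this to work mainly on older logs. The helper name is hypothetical.

```python
from collections import Counter
from urllib.parse import parse_qs, urlparse

# Assumed mapping of search-engine host fragments to the query-string
# parameter that carried the search terms; extend it for your own logs.
QUERY_PARAMS = {"google.": "q", "bing.": "q", "search.yahoo.": "p"}


def keyphrases_from_referrers(referrers):
    """Hypothetical helper: count search phrases found in referrer URLs."""
    counts = Counter()
    for referrer in referrers:
        parsed = urlparse(referrer)
        host = parsed.hostname or ""  # "-" (no referrer) yields no hostname
        for marker, param in QUERY_PARAMS.items():
            if marker in host:
                values = parse_qs(parsed.query).get(param)
                if values:
                    # Lower-case and collapse whitespace so "Bill  Gates" and
                    # "bill gates" are counted as the same phrase.
                    counts[" ".join(values[0].lower().split())] += 1
                break
    return counts
```

Calling `.most_common(20)` on the result gives a starting list of historically used keyphrases. You then add the ones you want the site to be found for.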

Finally, a technical audit of the existing site needs to be carried out. This audit should flag any issues that may currently be harming the site's search engine ranking (e.g. frames, dynamic URIs, Flash, and image maps or JavaScript used for navigation). Any that are found need to be documented, and plans put in place to replace them with search-engine-friendly alternatives.
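Parts of this audit can be automated. The following minimal Python sketch uses the standard library's `html.parser` to flag some of the patterns listed above in a single page's HTML. The class and function names are hypothetical, and the checks are heuristics. Any link containing `?` is treated as a dynamic URI. Flash is detected only from a `.swf` source or the `application/x-shockwave-flash` type. Every finding therefore still needs a human review.

```python
from html.parser import HTMLParser


class SeoAuditParser(HTMLParser):
    """Hypothetical audit helper: records markup that may hurt search rankings."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values may be None, hence the "or ''" below
        if tag in ("frameset", "frame", "iframe"):
            self.issues.append(f"frames: <{tag}>")
        elif tag == "map":
            self.issues.append("image map: <map> (check whether it is used for navigation)")
        elif tag in ("object", "embed"):
            source = (attrs.get("data") or attrs.get("src") or "").lower()
            mime = (attrs.get("type") or "").lower()
            if source.endswith(".swf") or "shockwave-flash" in mime:
                self.issues.append(f"Flash: <{tag}>")
        elif tag == "a":
            href = (attrs.get("href") or "").strip()
            if href.lower().startswith("javascript:"):
                self.issues.append(f"JavaScript navigation: {href}")
            elif "onclick" in attrs and href in ("", "#"):
                self.issues.append("JavaScript navigation: onclick link")
            elif "?" in href:
                # Heuristic: a query string usually means a dynamically generated URI.
                self.issues.append(f"dynamic URI: {href}")


def audit_html(html):
    """Return the list of issues found in one page of HTML."""
    parser = SeoAuditParser()
    parser.feed(html)
    parser.close()
    return parser.issues
```

Running `audit_html` over each page of the site gives a checklist of items to document before planning their replacements.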

I will be writing up the next step in this project in the next couple of days.


Tom,

I’m new to the whole area of SEO, so forgive me if my question sounds stupid!

In your articles on SEO, you mentioned Google Rankings. On Google's FAQ page I noticed the following:

“Automated rank checking programs violate Google’s Terms of Service. They use server resources that should be spent on answering user requests. We strongly request that you not use rank checking programs to check your position on Google.”

Is this a reference to services such as Google Rankings, or am I missing the point?

How much does webserver availability matter in SEO? I have my homepage on a server that has a DSL modem that sometimes fails for a few hours, and I feel I have difficulties being found on Google. Could this be the issue?

Almost certainly, Jonathan.

It isn't possible to say for certain, as the Google algorithm is a closely guarded secret, but it would stand to reason that a site's availability is one of the factors Google uses to rank a site.

Thanks for the answer.

PageRank is surely a secret algorithm, but common sense tells me that the modem is the culprit here. I will invest some money in getting a new one.

Sincerely,

Jonathan Fors

http://etnoy.broach.se

Good tutorial,

FYI, Google is now working on a new ranking algorithm/program called IndyRank. It uses a combination of Borg technologies and spacscoring to rank websites.

Good luck, Ruslan

The PageRank algorithm is relatively simple yet brilliant, and is available for all to see:

PageRank paper by Larry Page and Sergey Brin, completed while at Stanford University. Obviously there's a lot more to Google than the PageRank algorithm.

Roger, the article is very old.

Garry, you're right, it's from 2005. Better to search for something newer on this blog.

It's strange to see how much SEO has changed since 2005. Today it's mostly about links and content.

The method is old (it's one of the basic methods), but it works fine.

Thanks for the article anyway.